Bit of an open question - has anyone on here had experience connecting Matillion to ERwin Data Modeler either via ODBC or API to query the underlying model metadata? If so, I'd be keen to fire a few further questions at you.

Hi @Treaders.

I haven't used any modeler or anything against Matillion but I have connected to the Matillion DB via Postgres connection to check out the data and how it's stored. I didn't do anything with it other than looked around since it's not the supported method for reading data. We have spent a fair amount of time around the API though in the form of building automations and processes using the Matillion API.

@Treaders I have just completed my basic, intermediate and advanced course on Matillion and I do have a requirement to connect Matillion to Erwin data modeler tool. Is there some sort of analysis that you have done and would be ok to share?

Hi Pallavi,

I have successfully connected to Erwin from Matillion making use of the Erwin web portal, examples job of using the API to return all Tables and Columns within certain models is detailed below. Additional Web Portal API Dev info can be found here;

http://xxxxxxxxxx:11680/MMDoc/#/

http://metaintegration.com/Products/MIMM/REST-API/generalInfo.html#APIKey

I created an API Extract profile with a POST request to the following using the executeMQLQuery endpoint for testing. Don't use API Query Profile as there is currently a bug with Matillion not accepting "-" in Header parameter values, .i.e. "Content-type".

URL:

http://>ipaddress:11680/MM/rest/v1/entities/executeMQLQuery

First, you need to generate a login token. To authenticate you need to pass in an API Key-Value pair token generated by the /auth/login endpoint. You can generate this using one of the following;

1) Login to http://xxxxxxxxxx:11680/MMDoc/#/Authentication/resource_Authentication_loginPost_POST. Copy the value and add it as you’re setting up your Extract Profile for testing.

2) Use python, cURL or Postman to hit the following end point. Copy the value and add it as you’re setting up your Extract Profile for testing.:

Second, enter header parameters;

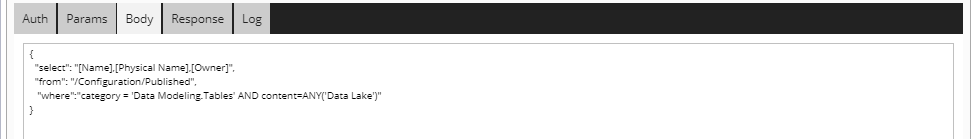

Third, enter relevant BODY that will form the MQL query, example below:

That should be enough to set up your profile. Once you have created your Extract profile you can use an API Extract component in an orchestration job, example below (please note this flows from right-to-left);

As the authentication token is temporary, I need to generate a new token with each run. I use a python script and write this value to a job variable, illustrated below;

The next API Extract components then connect to the aforementioned Extract Profile (selected in API Property). In the API Key Value properties I resolve the job variable that holds my authentication token. I pass in the body request which is formed of MQL hitting the executeMQL endpoint. More info on MQL - http://metaintegration.com/Products/MIMM/REST-API/mql.html

This component writes out the response into a variant column type in a target table.

The next step was the Transform jobs. The response structure isn’t the greatest, which means I couldn’t use the Flatten component in Matillion. I’ve written custom SQL (I use Snowflake) using the lateral flatten function a couple of times, and then a case statement to transpose the response. There maybe a better way to perform this transpose but I couldn’t use the Pivot function or any component in Matillion as there is no aggregate column.

Final python component then just ends the Erwin session.

Hope this helps. Shout if you have any other questions.

@Treaders - I could be asking a silly one, but can you share what your end goal was? We are in process of gathering requirement from the client, and I am still not certain what exactly they want to do by getting Matillion connected to Erwin. One thought was (yet to confirm), that client would like to "view" the warehouse tables/log/audit tables through a user friendly tool, which in this case is Erwin -- I could be wrong, but this is just a start.

I do want to sound dumb when talking to the client (I would not mind it here thought :-) ), hence trying to understand what your requirement for this connection was.

Thanks @Bryan . From what I can see, the ERwin Data Modeler module doesn't have a rest API, only a script/com based API framework so need something like Visual Basic to execute. ERwin's preferred method of accessing the metamodel is through the API and not ODBC so hoping to find someone who has conjured up something similar utilising Matillion.

Just out of curiosity, what processes have you developed utilising the Matillion API?

Thank you for this information. I would check on it and get back in case I get stuck.

Thanks for the info on ERwin Data Modeler @Treaders. I used the ERwin Modeler years ago and I am wondering what benefit the ERwin Modeler would have as it pertains to Matillion itself? I am wondering if I am missing something clever you are trying to use it for.

Most of our API projects were around automating Matillion instance upgrades/migrations. The API projects we have done is quite long but here are the ones I remember off the top of my head:

- Our own migration tools to migrate from one server to the next

- Leveraged a piece of that functionality to do deployments from one instance to another since our Matillion instances are in 2 different AWS accounts that are completely separate and have no connectivity between them.

- Used the API to offload Matillion logs from Matillion to Snowflake where we store all logging information about Matillion and Snowflake and then serve it up in a Power BI dashboard.

- Created a Shared Job that I believe is in the Shared Job Exchange that uses the API to determine if any jobs are running on the instance at a given time.

Like I said, there is probably more but those are the big ones. Thanks for sharing!

Hi Bryan,

Thanks for sharing the above.

I'm looking to extract the metadata model and persist the information in a Governance schema in Snowflake that holds information on our datalake and warehouse objects which can be surfaced through PowerBI. I will also investigate to see how/if I can create a metadata-driven ELT approach in Matillion using this information.

Cheers, Jase

Hi @Pallavi, our PoC requirement was to try create a metadata drive pipeline using metadata persisted in Erwin, mostly stored in UDPs. A secondary requirement was to collate the metadata in a governance schema so we can try get end-to-end lineage across our data platforms.

Hi @Treaders, from what I know of and seen of the Postgres or MongoDb databases that Matillion uses, there isn't much of value as it pertains to metadata. Not to mention you would be chasing their changes. Literally every version there is a schema change of some kind. The other downfall to it is that you would be putting an extra load on the database that it was not designed for every time you run queries directly against the DB. This can effect the performance of your Matillion instance depending on the existing load. Matillion only supports the usage of their API's for getting data from the database. This allows them to maximize performance for a single point of usage and always supports the customers' usage of the API whether Matillion's schema drastically changes or not. This probably doesn't help much in your scenario since you are in uncharted and unsupported territories but you could try to ingest API metadata and get a but further.